Face detection locates faces within an image. The input to a face detection algorithm is typically an image, and the output is a list of face bounding boxes; the list may contain zero, one, or several boxes depending on the scene. These bounding boxes are usually upright squares, although some more advanced techniques output rotated rectangles that better fit the face's orientation.

Most face detection algorithms scan the image to generate candidate regions and then classify each candidate as face or non-face. The running time therefore depends on factors such as image resolution and scene complexity. To improve speed, developers often tune parameters such as the input size, the minimum face size, or the maximum number of faces to detect.
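To make the link between these parameters and running time concrete, the following minimal sketch counts how many square windows a naive multi-scale sliding-window scan would have to classify. It is an illustration only, not the method of any particular detector; the function name and the `min_face`, `scale`, and `stride_frac` parameters are assumptions introduced here.

```python
def count_candidate_windows(width, height, min_face=24, scale=1.25, stride_frac=0.1):
    """Count the square windows a naive multi-scale sliding-window scan visits.

    All parameter names and defaults are hypothetical, chosen for illustration.
    """
    total = 0
    size = float(min_face)
    # Grow the window geometrically until it no longer fits in the image.
    while size <= min(width, height):
        win = int(size)
        # Step by a fraction of the window size, but by at least one pixel.
        stride = max(1, int(win * stride_frac))
        positions_x = (width - win) // stride + 1
        positions_y = (height - win) // stride + 1
        total += positions_x * positions_y
        size *= scale
    return total


if __name__ == "__main__":
    full = count_candidate_windows(640, 480)
    half = count_candidate_windows(320, 240)
    large_faces_only = count_candidate_windows(640, 480, min_face=48)
    print(full, half, large_faces_only)
```

Under these assumptions, halving the input resolution or doubling the minimum face size removes most of the candidates, because the smallest windows account for the bulk of the work.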

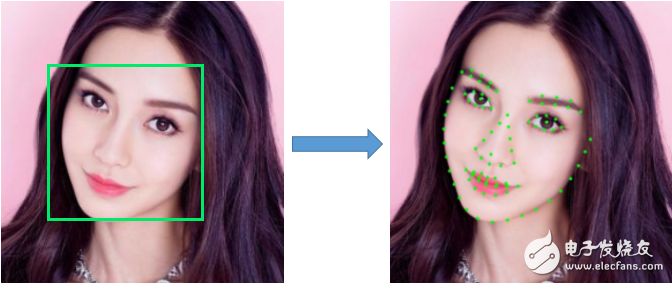

Figure 1: Example of face detection results (green box indicates the detected face).

Face registration, also known as facial landmark detection, is the process of identifying key points on a face, such as the eyes, nose, and mouth. The input to this algorithm includes a face image and its corresponding bounding box, while the output is a set of coordinates for these key points. Common configurations include 5-point, 68-point, or 90-point landmarks, depending on the application's needs.

Some modern face registration methods are based on deep learning. These algorithms first crop the face region given by the detected bounding box, scale it to a fixed size, and then estimate the positions of the key points. Because the model processes only this small fixed-size crop rather than the whole image, face registration is generally faster than face detection.
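Since the key points are estimated on the scaled crop, they have to be mapped back into the coordinates of the original image. A minimal sketch of that mapping is shown below, assuming the box is given as `(x, y, w, h)` and the crop is a square of `crop_size` pixels; both conventions and the function name are assumptions made for this example.

```python
def landmarks_to_image_coords(box, crop_points, crop_size=112):
    """Map key points predicted on a fixed-size crop back to image coordinates.

    box         -- (x, y, w, h) of the detected face in the original image
    crop_points -- list of (px, py) key points in crop pixel coordinates
    crop_size   -- side length of the square crop fed to the landmark model
                   (112 is a hypothetical value, not taken from the article)
    """
    x, y, w, h = box
    # Undo the scaling that was applied when the box was resized to the crop.
    scale_x = w / crop_size
    scale_y = h / crop_size
    return [(x + px * scale_x, y + py * scale_y) for px, py in crop_points]


if __name__ == "__main__":
    face_box = (100, 50, 224, 224)
    predicted = [(0, 0), (56, 56), (112, 112)]
    print(landmarks_to_image_coords(face_box, predicted))
```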

Figure 2: Example of face registration result (green points indicate the detected facial landmarks).

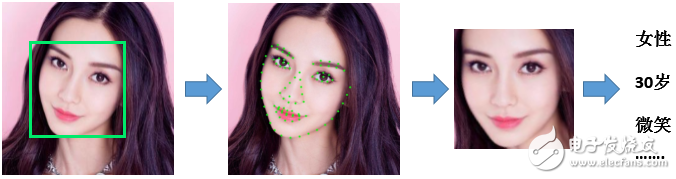

Face attribute recognition is the process of determining characteristics of a face such as gender, age, pose, and expression. The input to this algorithm usually consists of a face image and its key point coordinates. The key points are used to normalize the face to a standard size and orientation before attribute analysis is performed.
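One common way to perform this kind of normalization is a similarity transform (rotation, uniform scale, and translation) that moves the two eye centers onto fixed target positions. The sketch below illustrates the idea using complex arithmetic; it is not necessarily the method the article has in mind, and the target eye positions are hypothetical values for a 112x112 output.

```python
def similarity_from_eyes(left_eye, right_eye,
                         target_left=(38.0, 52.0), target_right=(74.0, 52.0)):
    """Return (m, t) such that z' = m * z + t maps the eyes onto the targets.

    Points are treated as complex numbers x + y*1j. The target positions are
    assumed values for illustration, not taken from the article.
    """
    s1, s2 = complex(*left_eye), complex(*right_eye)
    t1, t2 = complex(*target_left), complex(*target_right)
    if s1 == s2:
        raise ValueError("eye positions must be distinct")
    m = (t2 - t1) / (s2 - s1)  # encodes rotation and uniform scale
    t = t1 - m * s1            # translation that pins the left eye to its target
    return m, t


def apply_transform(point, m, t):
    """Apply the similarity transform to one (x, y) point."""
    z = m * complex(*point) + t
    return (z.real, z.imag)


if __name__ == "__main__":
    m, t = similarity_from_eyes((100.0, 100.0), (200.0, 100.0))
    print(apply_transform((100.0, 100.0), m, t))
    print(apply_transform((200.0, 100.0), m, t))
```

The same `(m, t)` pair can then be used to warp every pixel or key point of the face, so that all faces reach the attribute model at the same size and orientation.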

Traditional approaches treat each attribute as a separate task, but recent deep learning models can recognize multiple attributes simultaneously. This makes the system more efficient, because a single model run covers all attributes, and it can also improve accuracy in real-world applications.

Figure 3: Face attribute recognition process (the text on the right shows the recognized attributes).

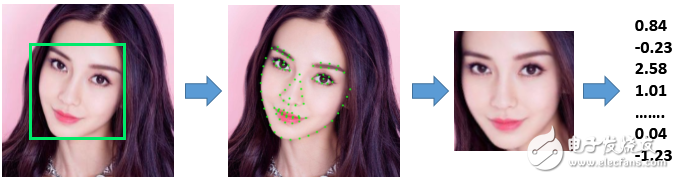

Face feature extraction is the process of converting a face image into a numerical representation, known as a face feature vector. This vector serves as a compact, distinctive description of the face and is used in applications such as face verification and recognition.

The input to this process includes a face image and its aligned key points. The face is then transformed into a standardized format, and features are extracted using either traditional methods or deep learning models. Recent advancements have made it possible to deploy lightweight and fast face feature extraction models on mobile devices.

Figure 4: Face feature extraction process (the value string on the right represents the face feature).

Face comparison is the process of measuring the similarity between two face features. It underlies face verification, recognition, retrieval, and clustering, and is commonly used in security systems, identity verification, and social media applications. The algorithm calculates a similarity score, which is then used to decide whether the two faces belong to the same person.
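As a rough illustration of how a similarity score can be computed and used, the sketch below compares feature vectors with cosine similarity, one commonly used measure; the article does not say which measure it assumes. The function names and the `threshold` value of 0.5 are hypothetical, and in practice the threshold would be tuned for the specific feature extraction model.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("feature vectors must be non-zero")
    return dot / (norm_a * norm_b)


def is_same_person(a, b, threshold=0.5):
    """Verification (1:1): accept the pair if the score reaches the threshold."""
    return cosine_similarity(a, b) >= threshold


def identify(probe, gallery, threshold=0.5):
    """Recognition (1:N): return the best-matching name, or None if no
    gallery entry scores at least `threshold`."""
    best_name, best_score = None, threshold
    for name, feature in gallery.items():
        score = cosine_similarity(probe, feature)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


if __name__ == "__main__":
    gallery = {"alice": [1.0, 0.0], "bob": [0.0, 1.0]}
    print(is_same_person([1.0, 2.0], [2.0, 4.0]))
    print(identify([0.9, 0.1], gallery))
```

Verification compares one pair of features, while recognition and retrieval repeat the same comparison against a gallery of stored features.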
